Machine learning (ML) models are increasingly used in fields such as financial services (e.g., stock price prediction), law (e.g., case summarization), and even medicine (e.g., drug development). Although ML models are very good at making predictions, they are poor at explaining them in a way that lets humans easily understand how a model arrived at a particular prediction.

ML models can be so complex that they are hard to understand: they draw conclusions from a huge number of features and perform so many calculations that it is difficult for researchers and developers to understand why and how an algorithm makes a given prediction [1]. For a long time, researchers tried to explain their models based on judgment, experience, or observation.

Advances in research now make it possible to examine how ML models arrive at their predictions. This ability to understand how a model makes predictions is called interpretability. Machine learning interpretability is becoming very popular for several reasons. First, in some fields, algorithmic decisions must be explained because of laws or regulations. Second, interpretability is essential for debugging an algorithm or identifying embedded bias. Finally, it helps researchers and developers assess the trade-offs of an ML model.

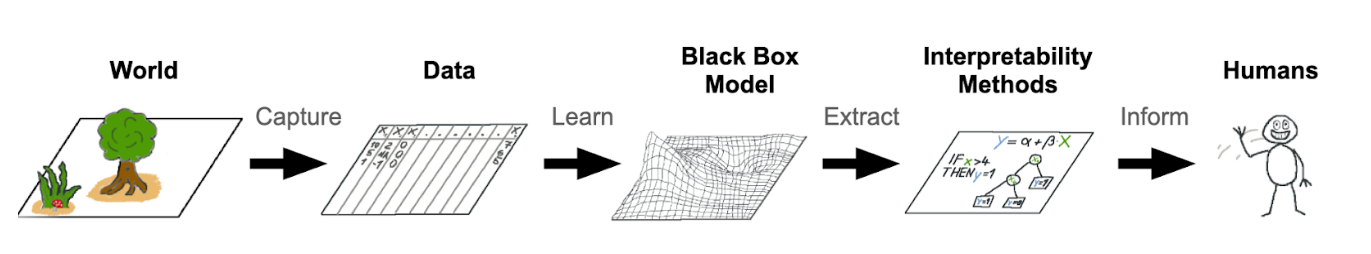

Figure 1 presents a high-level view of model-agnostic interpretability. First, we capture the world by collecting data, and we abstract it by training a machine learning model to predict that data. Then, interpretability helps humans understand the model (a black box) through a simpler, more interpretable model [3].

Figure 1: Model-agnostic interpretability. Image extracted from [3].
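To make the idea in Figure 1 concrete, here is a minimal sketch of a global surrogate in plain Python: the black box's own predictions are used as labels, a simple rule is fitted to them, and the rule's fidelity (how often it agrees with the black box) is reported. The black-box function, the sampled data, and the choice of a one-threshold decision stump as the surrogate are hypothetical assumptions for illustration, not the method from [3].

```python
import random


def black_box(x1: float, x2: float) -> int:
    # Hypothetical opaque classifier: we only observe its outputs.
    return 1 if x1 ** 2 + 0.5 * x2 > 2.0 else 0


def fit_stump(points, labels):
    """Find the rule 'predict 1 if x[feature] > threshold' that agrees most
    often with `labels`. Returns (fidelity, feature, threshold)."""
    best = (-1.0, 0, 0.0)
    for feature in range(len(points[0])):
        # Try every observed value of this feature as a candidate threshold.
        for threshold in sorted({pt[feature] for pt in points}):
            preds = [1 if pt[feature] > threshold else 0 for pt in points]
            agreement = sum(pr == y for pr, y in zip(preds, labels)) / len(labels)
            if agreement > best[0]:
                best = (agreement, feature, threshold)
    return best


random.seed(0)
data = [(random.uniform(0.0, 3.0), random.uniform(-1.0, 1.0)) for _ in range(200)]
# The surrogate is trained on the black box's predictions, not on ground truth.
targets = [black_box(x1, x2) for x1, x2 in data]
fidelity, feature, threshold = fit_stump(data, targets)
print(f"Surrogate rule: predict 1 if x{feature + 1} > {threshold:.2f}")
print(f"Fidelity to the black box: {fidelity:.0%}")
```

Because the hypothetical black box depends mostly on x1, the stump should pick x1 with a threshold somewhere between roughly 1.2 and 1.6 and agree with the black box on most samples. A high fidelity suggests the simple rule is a reasonable explanation of the black box on this data; a low fidelity means the explanation should not be trusted.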

How to implement interpretability?

Google has a very interesting paper, "The Building Blocks of Interpretability," that presents the challenges of making machine learning models interpretable.

In deep learning models, the "knowledge" is formed in the hidden layers. Understanding what the different hidden layers do is still a challenge, yet it is essential for interpreting a model.

Segmenting a neural network into groups of interconnected neurons and understanding how each group works provides a simpler level of abstraction for understanding the network's functionality.

To better interpret neural networks, it is essential to understand how they form individual concepts and how they assemble them into the final output.
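As a toy illustration of how individual units assemble into the final output, the sketch below attributes a small network's output to its hidden neurons, in the spirit of the attribution ideas discussed in the paper (it is not the paper's code). The network, its weights, and the input are hypothetical. Because the output layer here is linear, each hidden neuron's contribution is simply its activation times its outgoing weight, and the contributions add up to the output minus the output bias.

```python
# Hypothetical 2-input, 3-hidden-neuron ReLU network with a linear output.
# Weights are chosen by hand for illustration only.
W_HIDDEN = [[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]]  # one row per hidden neuron
B_HIDDEN = [0.0, 0.1, -0.5]
W_OUT = [2.0, -1.0, 0.5]
B_OUT = 0.2


def relu(z: float) -> float:
    return max(0.0, z)


def forward(x):
    """Return (output, hidden activations) for input vector x."""
    hidden = [relu(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W_HIDDEN, B_HIDDEN)]
    output = B_OUT + sum(w * h for w, h in zip(W_OUT, hidden))
    return output, hidden


def neuron_attributions(x):
    """Contribution of each hidden neuron to the output for input x.
    With a linear output layer this is exactly weight * activation."""
    _, hidden = forward(x)
    return [w * h for w, h in zip(W_OUT, hidden)]


x = [1.0, 0.5]
output, hidden = forward(x)
contribs = neuron_attributions(x)
print("output:", round(output, 4))
# List neurons from most to least influential for this input.
for j, c in sorted(enumerate(contribs), key=lambda t: -abs(t[1])):
    print(f"neuron {j}: contribution {c:+.2f}")
```

For the input [1.0, 0.5], neuron 0 pushes the output up, neuron 1 pushes it down, and neuron 2 is inactive (its ReLU outputs 0), so it contributes nothing. In deep networks the layers are nonlinear, so attribution methods need approximations such as gradients, which is part of what makes interpretation hard.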

When don't you need interpretability?

Not every model requires interpretability. Building interpretable models is an investment of time and money. So when don't you need to build interpretable models? First, when the problem is well studied, you can get lots of training data, your dataset is balanced, and you can rely on the model's performance [3]. Second, when interpretability has no impact on the end customer or user.

Open source libraries and tools for building interpretable machine learning models

Python libraries:

1. ELI5 ("Explain like I'm 5") is a Python package that helps debug machine learning classifiers and explain their predictions. The name refers to a five-year-old child, implying that the person requesting the explanation has a limited or naive understanding of the model.

2. LIME (Local Interpretable Model-agnostic Explanations) can explain the predictions of any black-box classifier with two or more classes.

3. SHAP (SHapley Additive exPlanations) is a game-theoretic approach to explaining the output of any machine learning model.

4. Alibi focuses on providing high-quality implementations of black-box, white-box, local, and global explanation methods for classification and regression models.
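SHAP builds on Shapley values from cooperative game theory. The sketch below computes exact Shapley values for a single prediction by enumerating every coalition of features, which is only feasible for a handful of features; it is not the shap library's API or algorithm, which relies on faster approximations. The model, the input, and the convention of replacing "absent" features with a baseline of zeros are assumptions for illustration.

```python
from itertools import combinations
from math import factorial


def model(x):
    # Hypothetical model with an interaction between features 0 and 1.
    return 3.0 * x[0] + 2.0 * x[1] + x[0] * x[1] - 1.0 * x[2]


def value(subset, x, baseline):
    """Model output when only features in `subset` take their real values;
    the rest are set to the baseline (an assumed way to 'remove' features)."""
    mixed = [x[i] if i in subset else baseline[i] for i in range(len(x))]
    return model(mixed)


def shapley_values(x, baseline):
    """Exact Shapley value of each feature: its marginal contribution,
    averaged over all coalitions with the standard Shapley weights."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}, x, baseline) - value(s, x, baseline))
    return phi


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(x, baseline)
print("Shapley values:", phi)
print("sum:", sum(phi), "f(x) - f(baseline):", model(x) - model(baseline))
```

For this input the values come out to about 4, 5 and -3 (up to floating-point rounding): each feature gets its own linear effect, the x[0] * x[1] interaction (worth 2) is split equally between features 0 and 1, and the values sum to f(x) - f(baseline) = 6. This additivity property is what the "Additive" in SHAP's name refers to.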

Another open source tool:

- AI Explainability 360 is an open-source toolkit from IBM. It provides state-of-the-art algorithms that support the interpretability and explainability of machine learning models.

About the author:

Isabella Ferreira is an Ambassador at TARS Foundation, a cloud-native open-source microservice foundation under the Linux Foundation.

References:

[1] https://www.twosigma.com/articles/interpretability-methods-in-machine-learning-a-brief-survey/

[2] https://neptune.ai/blog/explainability-auditability-ml-definitions-techniques-tools

[3] https://www.analyticsvidhya.com/blog/2019/08/decoding-black-box-step-by-step-guide-interpretable-machine-learning-models-python/?utm_source=blog&utm_medium=6-python-libraries-interpret-machine-learning-models

About the TARS Foundation

The TARS Foundation is a nonprofit, open source microservice foundation under the Linux Foundation umbrella to support the rapid growth of contributions and membership for a community focused on building an open microservices platform. It focuses on open source technology that helps businesses embrace microservices architecture as they innovate into new areas and scale their applications. For more information, please visit tarscloud.org.